Scala flatMap FAQ: Can you share some Scala flatMap examples with lists and other sequences? The main topic here is flatMap, but I want to introduce you slowly to more complex problems.

The zipAll method returns an iterable collection formed from this iterable collection and another iterable collection by combining corresponding elements in pairs. If one of the two collections is shorter than the other, placeholder elements are used to extend the shorter collection to the length of the longer. Its parameters are that, the iterable providing the second half of each result pair; thisElem, the element to be used to fill up the result if this iterable collection is shorter than that; and thatElem, the element to be used to fill up the result if that is shorter than this iterable collection. It returns a new collection of type That containing pairs consisting of corresponding elements of this iterable collection and that. The length of the returned collection is the maximum of the lengths of this iterable collection and that. If this iterable collection is shorter than that, thisElem values are used to pad the result.

First, all the elements of the list were displayed on the terminal.

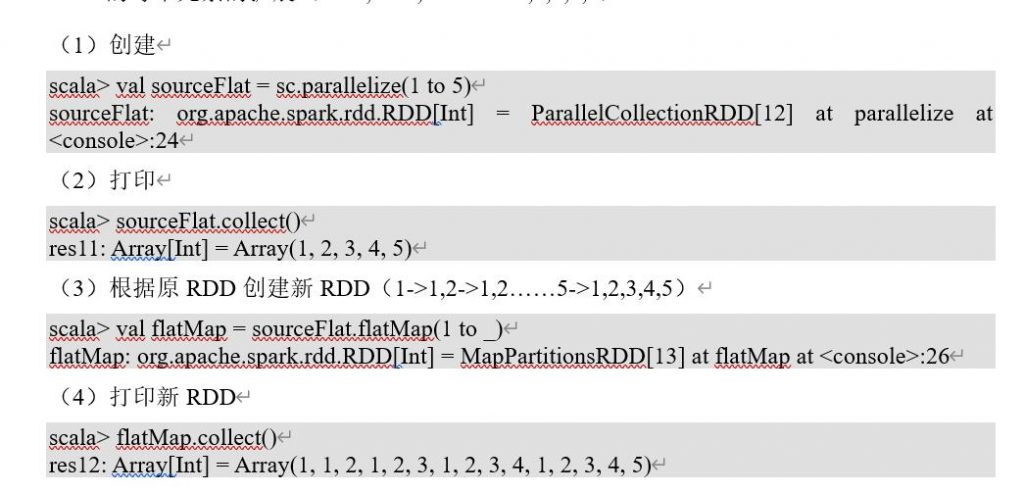
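The pairing-with-padding behaviour described above matches the standard library's zipAll method. The following is a minimal sketch, assuming Scala 2.13 or later; the object name ZipAllSketch and the sample values are made up for illustration and do not come from the original script.

```scala
object ZipAllSketch {
  // Hypothetical sample data: the first list is longer than the second.
  val letters: List[String] = List("a", "b", "c")
  val numbers: List[Int]    = List(1, 2)

  // numbers is the shorter side, so thatElem (0) pads the second half of the pairs.
  val padRight: List[(String, Int)] = letters.zipAll(numbers, "?", 0)

  // Here the receiver is the shorter side, so thisElem ("?") pads the first half.
  val padLeft: List[(String, Int)] = List("a").zipAll(numbers, "?", 0)
}
```

In both cases the result is as long as the longer input, which is the property that distinguishes zipAll from plain zip (zip truncates to the shorter input).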

For Steppers marked with scala.collection.Stepper.EfficientSplit, the converters in scala.jdk.StreamConverters allow creating parallel streams, whereas bare Steppers can be converted only to sequential streams.

To compile this Scala script, we used the command given below: scalac FlatMap.scala. Then, to run the compiled program, we used the following command: scala FlatMap. Now it is time to discuss the output of this script, which is shown in the image below.

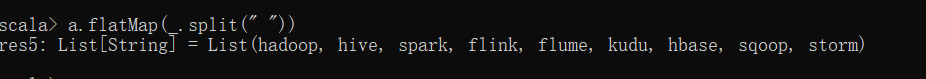

The stepper method returns a scala.collection.Stepper for the elements of this collection. The Stepper enables creating a Java stream to operate on the collection, see scala.jdk.StreamConverters. For collections holding primitive values, the Stepper can be used as an iterator which doesn't box the elements. The implicit parameter defines the resulting Stepper type according to the element type of this collection. For collections of Int, Short, Byte or Char, a scala.collection.IntStepper is returned. For collections of Double or Float, a scala.collection.DoubleStepper is returned. For collections of Long, a scala.collection.LongStepper is returned. For any other element type, a scala.collection.AnyStepper is returned. Note that this method is overridden in subclasses and the return type is refined to S with EfficientSplit, for example scala.collection.IndexedSeqOps.stepper.

The function flatMap() is one of the most popular functions in Scala; the trait Iterable[A] defines it for the standard collections.

Map transformation, Scala example. Create the RDD (spark is assumed here to be an existing SparkSession): val data = spark.read.textFile("INPUT-PATH").rdd. This statement creates an RDD named data. Follow this guide to learn more ways to create RDDs in Apache Spark. Map Transformation-1: val newData = data.map(line => line.toUpperCase()). This map transformation converts every record of the RDD to upper case. Map Transformation-2: val tag = data. A typical use of flatMap is converting a List of words into separate records.

The filter() method is used to select all elements of a map which satisfy a stated predicate. Method definition: def filter(p: ((A, B)) => Boolean): Map[A, B]. Return type: it returns a new map consisting of all the elements of the map which satisfy the given predicate.

In Scala 2.13, LazyList (previously Stream) became fully lazy from head to tail. To make this possible, methods such as filter and flatMap are implemented in a way where the head is not evaluated unless that is explicitly requested.

Maybe I don't understand some fundamentals, but what is the signature of flatMap in general? Let's imagine I'd like to implement it for a type T.
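The map, flatMap, filter and LazyList behaviour discussed above can be tried without a Spark cluster. The sketch below uses plain Scala collections as a stand-in for an RDD (an assumption made for illustration, since the RDD methods of the same name behave analogously on records); the object name CollectionSketch and all sample data are hypothetical. It assumes Scala 2.13 or later, because LazyList does not exist in earlier versions.

```scala
object CollectionSketch {
  // Hypothetical input lines, standing in for the records of an RDD.
  val lines: List[String] = List("hello world", "flat map")

  // map produces exactly one output per input: each line is upper-cased.
  val upper: List[String] = lines.map(line => line.toUpperCase)

  // flatMap may produce several outputs per input and flattens the results,
  // so each word becomes a separate record.
  val words: List[String] = lines.flatMap(line => line.split(" ").toList)

  // filter on a Map takes a predicate over (key, value) pairs.
  val ages: Map[String, Int]   = Map("ann" -> 31, "bob" -> 17, "cy" -> 45)
  val adults: Map[String, Int] = ages.filter { case (_, age) => age >= 18 }

  // LazyList: the counter records how many elements have been computed.
  var evaluated: Int = 0
  val doubled: LazyList[Int] = LazyList.from(1).map { n => evaluated += 1; n * 2 }

  // Nothing has been computed yet, not even the head.
  val evaluatedBeforeUse: Int = evaluated

  // Forcing the first three elements triggers the computation.
  val firstThree: List[Int] = doubled.take(3).toList
}
```

The counter is only there to make the laziness observable; in ordinary code a side effect inside map on a LazyList is best avoided.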
Mapping over lists: map, flatMap and foreach. The operation xs map f takes as operands a list xs and a function f, and returns the list that results from applying f to each element of xs. For instance: scala> List(1, 2, 3) map (_ + 1) gives res29: List[Int] = List(2, 3, 4).

map() and flatMap() on Option[+A]: if we look at the signatures of the map() and flatMap() methods in class Option, they look like this: def map[B](f: A => B): Option[B] and def flatMap[B](f: A => Option[B]): Option[B]. Both methods are final; keeping that aside, we see that the signatures of the two methods look very similar. They differ only in the return type of f.

Yes, like options and lists, Try[A] is an algebraic data type, so it can be decomposed using pattern matching.
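The difference between map and flatMap on Option, and pattern matching on Try, can be illustrated with a short sketch. The object name OptionSketch and the helpers parseInt and describe are hypothetical names introduced only for this example; Option, Try, Success and Failure are from the standard library.

```scala
import scala.util.{Failure, Success, Try}

object OptionSketch {
  // Hypothetical helper: Some(number) if the string parses as an Int, else None.
  def parseInt(s: String): Option[Int] = Try(s.trim.toInt).toOption

  // map: the function returns a plain value, which map wraps in the Option.
  val mapped: Option[Int] = Some(20).map(_ + 1)

  // map with an Option-returning function leaves a nested Option.
  val nested: Option[Option[Int]] = Some("42").map(parseInt)

  // flatMap flattens that extra layer.
  val flat: Option[Int]    = Some("42").flatMap(parseInt)
  val missing: Option[Int] = Some("oops").flatMap(parseInt)

  // Try is decomposed with pattern matching on its two cases.
  def describe(t: Try[Int]): String = t match {
    case Success(value) => s"ok: $value"
    case Failure(error) => s"failed: ${error.getClass.getSimpleName}"
  }
}
```

For example, OptionSketch.describe(Try("x".toInt)) takes the Failure branch, because toInt throws a NumberFormatException that Try captures.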
